Today humanity stands on the brink of a new era, one that brings both incredible opportunities and serious threats. A correspondent from the "Russian Gazette" (RG) discusses these questions with Askar Akayev, a professor at Moscow State University and a foreign member of the Russian Academy of Sciences.

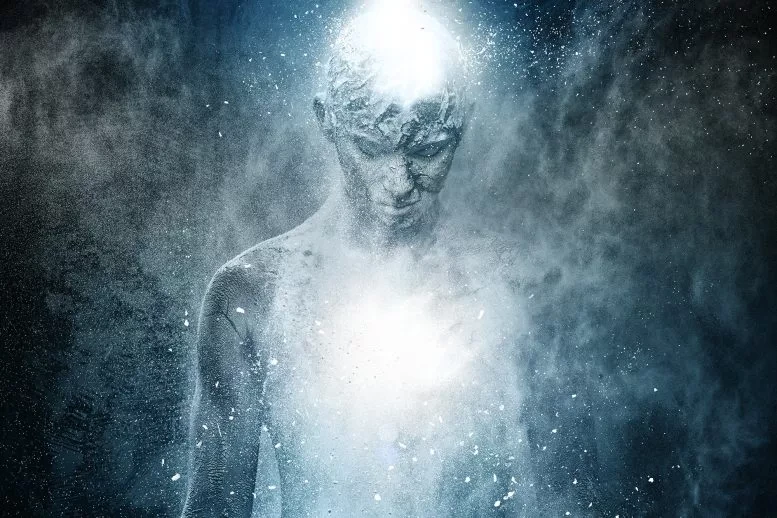

Askar Akayevich, in your recent article with professors Ilya Ilyin and Andrey Korotaev in the journal "Herald of the Russian Academy of Sciences," you write that humanity may face the so-called singularity in the coming years. The term "singularity" frightens many people because it is associated with "black holes," from which nothing can escape. What real threats does this singularity pose?

Askar Akayev: First of all, it is worth noting that many astrophysicists are now ready to rethink the concept of "black holes," but that is a separate topic. We are talking about technological singularity, which may occur between 2027 and 2029, when artificial intelligence reaches a level comparable to human intelligence. Such AI will be capable of performing tasks that are currently only possible for highly qualified specialists.

However, what is most impressive is not the AI itself, but the fact that its emergence coincides with a number of global evolutionary processes, which suggests some kind of predestination.

This sounds almost mystical. Can you explain what you mean by technological singularity?

Askar Akayev: This is a period of uncertainty when technologies will develop so rapidly that we will not be able to predict how our society will change. According to our calculations, this strange phase will begin in 2027-2029 and last from 15 to 20 years.

And, as I understand it, it is artificial intelligence that will be the engine that launches this process?

Askar Akayev: Yes, but the picture is much more complex and interesting than that. The emergence of human-level AI coincides in time with turning points in several global evolutionary processes, and that makes the situation unique.

Let’s start with demographics. At the end of the 19th and the beginning of the 20th century, humanity experienced a sharp population increase that lasted several decades. As early as 1798, the British scholar Thomas Malthus had warned that population would keep growing without limit, ultimately leading to global famine. Later, mathematicians seemed to confirm his fears: their models showed that the number of people could grow to unimaginable scales and even pointed to critical dates, such as 2026, when, according to their calculations, something catastrophic was supposed to happen. These forecasts were later pushed back to 2027.

However, since the early 1960s, without any external cause, birth rates have been declining steadily. This change signaled that the population would keep growing, but more and more slowly.
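The "critical date" in such forecasts is a property of hyperbolic growth. As a minimal sketch, assuming the models meant here are of the hyperbolic type used in Heinz von Foerster and colleagues' 1960 "Doomsday" paper, let $N(t)$ be world population and let $C$ and $t_1$ be fitted constants (symbols introduced here for illustration only). If the growth rate is proportional to the square of the population, the solution becomes infinite at a finite date $t_1$, which such fits placed around 2026:

$$
\frac{dN}{dt} = \frac{N^2}{C}
\quad\Longrightarrow\quad
N(t) = \frac{C}{t_1 - t}, \qquad N(t) \to \infty \ \text{ as } t \to t_1 .
$$

Unlike exponential growth, whose relative rate $\dot N / N$ is constant, here $\dot N / N = 1/(t_1 - t)$ itself keeps rising. The slowdown since the 1960s marks the point where real population figures began to fall below this curve.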

What consequences will this slowdown lead to?

Askar Akayev: According to the forecasts of Sergey Petrovich Kapitsa, by 2100 the population will reach 11.4 billion, while our own calculations with Academician Sadovnichy show that by the middle of the century this number will not exceed 9 billion, and by the end of the 21st century it will decrease to 7.9 billion due to the integration of intelligent systems into everyday life.

The slowdown, however, is not limited to demographics. Our astrophysicist Alexander Panov and the American scientist Raymond Kurzweil independently showed that it also applies to macroevolution in all its aspects, including the evolution of the Universe.

Professor Andrey Korotaev mathematically proved that three evolutionary processes that had been accelerating began to slow down and converged at one point in 2027-2029.

How is such a coincidence possible? The probability of this seems extremely low.

Askar Akayev: Nevertheless, that is what the formulas show. Moreover, just as humanity reaches the limit of its evolutionary growth, AI appears and begins to develop rapidly. It could become a catalyst for evolution, accelerating it many times over.

So AI could play the role of a savior amid the evolutionary slowdown. Its emergence at a moment of crisis is a plot worthy of a science fiction novel. Could some higher power have programmed such coincidences?

Askar Akayev: Honestly, I do not have an answer to this question. Let’s hope that science will find it over time.

Returning to the singularity expected in 2027-2029, why can’t we predict the laws that will apply at that time? Why is there such uncertainty?

Askar Akayev: We do not know how the interaction between AI and humans will occur. Two scenarios are possible: either we will develop alongside AI, or it will take control.

Elon Musk and Sam Altman, the head of OpenAI, the company that developed ChatGPT, believe that by 2030 a powerful superintelligence surpassing human intelligence will emerge, and that humanity will then no longer be needed.

Askar Akayev: This resembles the concepts of "Gaia" and "Medea." The former suggests that humanity was created to assist the Almighty in managing the world, while the latter suggests that we will destroy ourselves by handing over the baton to AI. We do not know which of the concepts will become a reality. Both options have their supporters.

It all depends on how AI is implemented. If it works in symbiosis with humans and under control, it will pave the way for "Gaia." In this case, we can expect amazing breakthroughs in various fields. However, if AI gets out of control and becomes a rival to humans, the "Medea" scenario will occur, and the degradation of humanity will become inevitable. The next 15-20 years will be a time of both great opportunities and significant risks, making this period unique in history.

Throughout its history, humanity has almost always been at war, and talk of a Third World War is now increasingly common. Perhaps superintelligence is precisely what could stop human conflicts, since it would want to survive rather than perish along with humans.

Askar Akayev: But by the same logic, AI may decide that the best solution is to eliminate those who threaten its existence. That would lead to the "Medea" scenario.

So the task of science is to stop the proponents of superintelligence. But is that possible? Every country now proclaims that "AI is our future!", and a technological arms race has begun in which the winners will rule the world. In this situation, it is hard to tell which path leads to "Gaia" and which to "Medea."

Askar Akayev: The main threat of modern AI systems is that they are a "black box." When a person controls AI, they have the final say in decision-making, but if everything is handed over to such a "box," it becomes dangerous.

Is there a way out? Yes, there is. The main principle in creating AI should be this: the more mathematics in the model, the less of a "black box" it is. Systems with clear cause-and-effect relationships are already being developed. The underlying ideas were proposed back in the 1950s and 1960s by the great mathematicians Andrey Kolmogorov and Vladimir Arnold. Their work is the basis for neural networks that solve scientific and technological problems while preserving cause-and-effect relationships.
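The Kolmogorov and Arnold ideas referred to here are presumably their superposition theorem of 1956-1957, which resolved Hilbert's thirteenth problem; that reading is an assumption. The theorem states that every continuous function of $n$ variables on the unit cube can be assembled from continuous functions of one variable and addition alone, where $\Phi_q$ ("outer") and $\varphi_{q,p}$ ("inner") are one-variable functions:

$$
f(x_1,\dots,x_n) = \sum_{q=0}^{2n} \Phi_q\!\left( \sum_{p=1}^{n} \varphi_{q,p}(x_p) \right),
\qquad \text{e.g. } \; xy = \exp(\ln x + \ln y) \ \text{ for } x, y > 0 .
$$

Networks built on this structure, such as the Kolmogorov-Arnold networks proposed in 2024, learn each one-variable function separately, so every component can be plotted and inspected. That is one concrete sense in which "more mathematics" makes a model less of a "black box."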

So if we understand how AI thinks, if the "black box" becomes transparent, then AI stays under control. Why, then, do so many fear "Medea"?

Askar Akayev: Unfortunately, only human-level AI will remain under control; superintelligence will act independently of humans. Humanity needs to agree on embedding a "genetic code" of friendliness and symbiosis with humans into neural networks, so that this "gene" is passed on to future generations of AI and they observe it as a fundamental law of their behavior.

Information from "RG"

The term "singularity" comes from the Latin word "singularis," meaning "unique, special." There is no single definition of the term today, since it is used in many fields and has its own meaning in each. In philosophy, for example, a singularity is an event that makes possible what had seemed impossible.

Technological singularity implies that the development of science and technology will become uncontrollable and irreversible, leading to significant changes in human civilization.

Cosmological singularity is the hypothesized state of the Universe at the moment of the Big Bang.

Gravitational singularity is a theoretical concept describing a place where the curvature of space-time becomes infinite: the fabric of space, in effect, tears and ceases to exist.