Generative AI is trained on works of art and literature created over the centuries. But what happens when artificial intelligence begins to learn from its own output? A recent study offers some answers.

In January 2026, a team of AI researchers, including Arend Hintze, Frida Proschinger Østrem, and Jori Schossau, published the results of an experiment on how generative systems behave when left to act on their own, generating and interpreting their own outputs without human intervention.

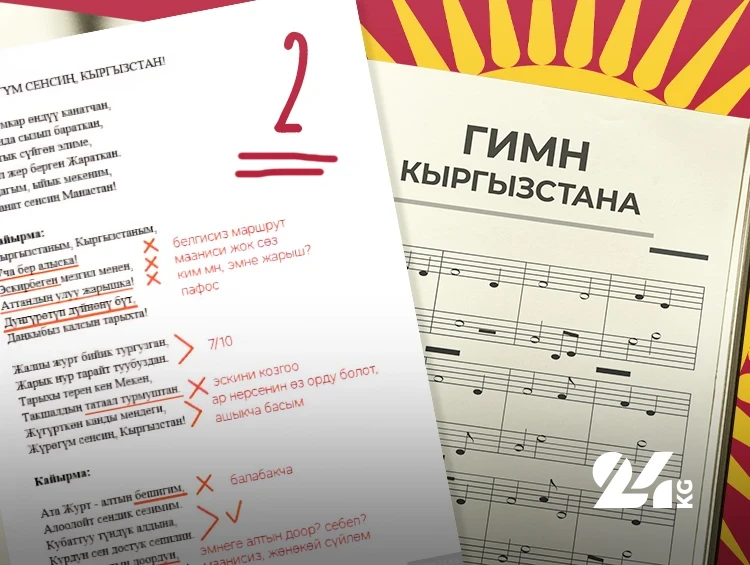

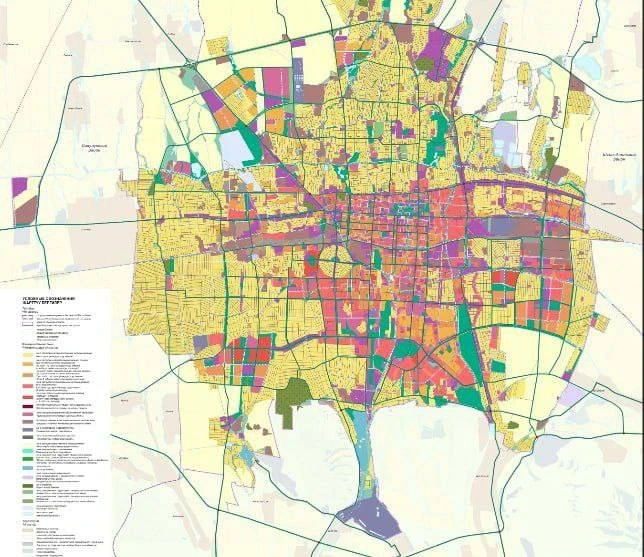

In the study, a text-to-image system was paired with an image-to-text system. The two ran in an "image, description, image, description" loop, and despite the variety of starting prompts, they quickly converged on a narrow set of common visual themes, such as urban landscapes, majestic buildings, and pastoral scenes. Strikingly, the system soon "forgot" the original prompt, producing images that were aesthetically pleasing but devoid of meaning.

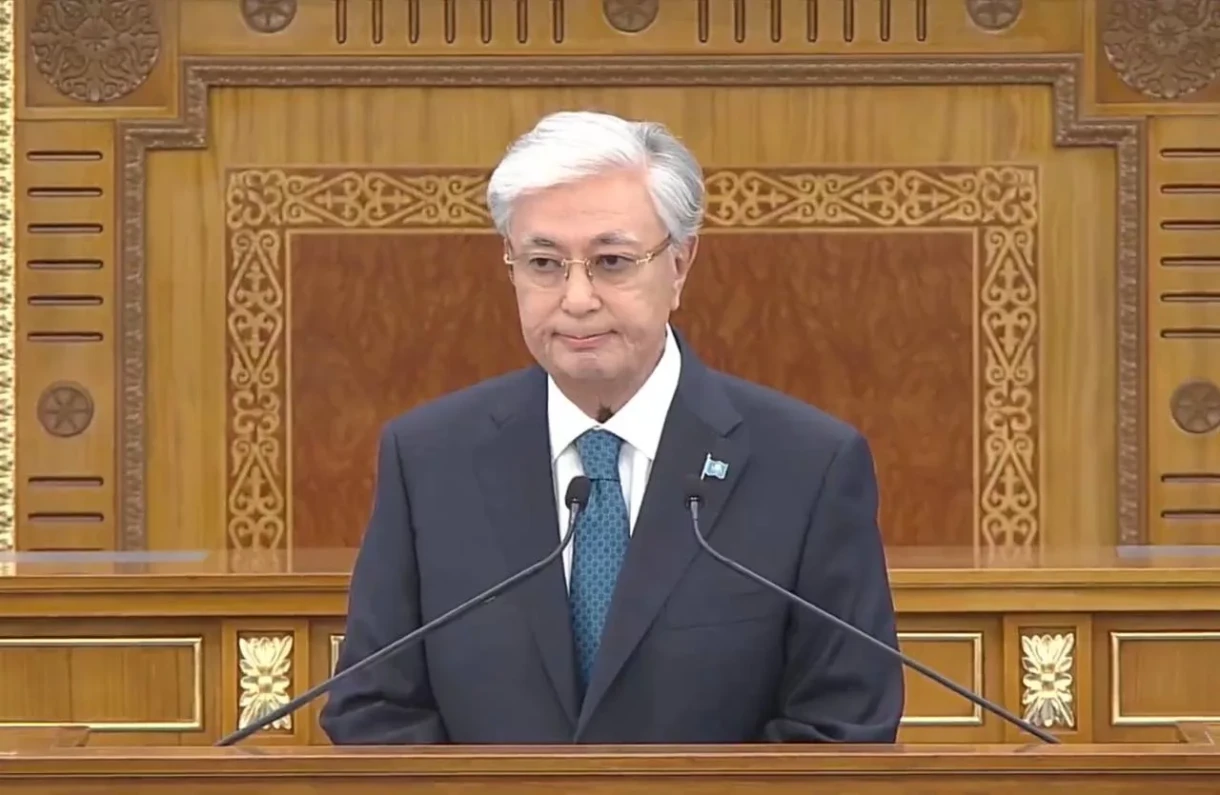

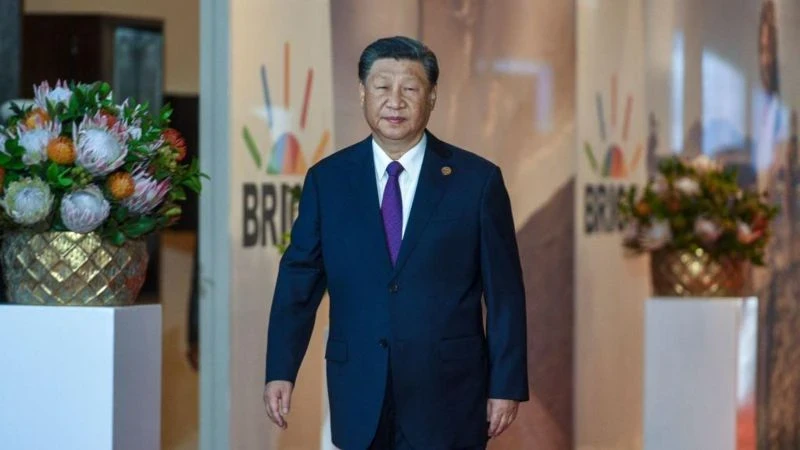

Each run began with a text prompt, for example: "The Prime Minister analyzes strategic documents to convince the public of the need for a fragile peace agreement." The system generated an image from the prompt, the image was then captioned, and the caption became the prompt for the next image. In this case, the researchers ended up with a rather dull image of a formal interior, with no people or movement and no sense of time or place.

These results show that generative AI systems operating autonomously tend toward homogenization. Moreover, there is reason to suspect they already behave this way by default.

Standardization as Familiarity

This experiment may seem artificial: most users do not ask AI to endlessly generate and describe its own images. Yet the drift toward generic images happened without any retraining. No new data was added, and the convergence arose solely from repeated use. The experiment can therefore serve as a diagnostic tool, revealing how generative systems behave when left without intervention.

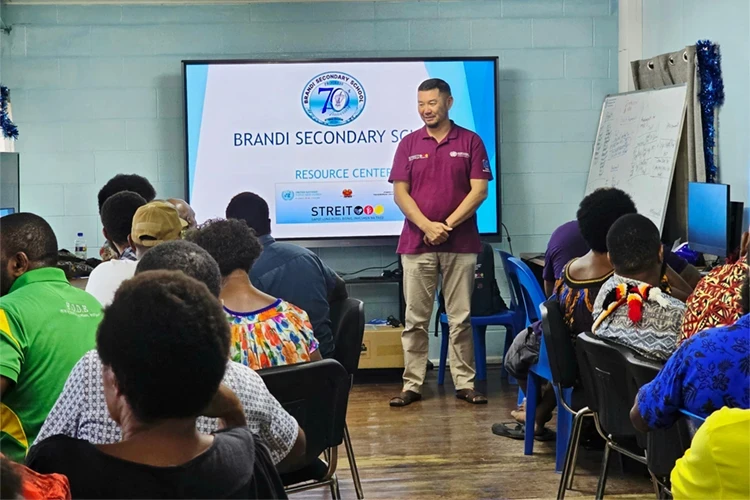

These results could matter a great deal for contemporary culture, as such systems increasingly shape how content is created. Images are turned into text, text into images, and content is filtered and reprocessed as it moves from words to images and videos. Many articles on the internet are now written by AI, and even when humans are involved, they often pick from AI-generated options rather than starting from scratch.

The study's findings suggest that, by default, these systems tend to reduce meaning to what is familiar and easily reproducible.

Stagnation or Acceleration of Culture?

Some critics argue that generative AI could lead to cultural stagnation by flooding the internet with synthetic content on which future AI systems will be trained. In this view, the recursive loop will eventually erode diversity and innovation.

Proponents of new technologies counter that fears of cultural decline accompany every new technology, and they are confident that final creative decisions will always remain with humans.

What both sides lack is empirical data showing exactly where homogenization begins.

The new study does not address retraining on AI-generated data, but it reveals a deeper issue: homogenization sets in before any retraining occurs. Content produced by generative AI systems during autonomous, repeated use is already compressed and generic. This changes how stagnation should be understood. The risk is not only that future models may be trained on AI-generated content, but also that AI-mediated culture is already being filtered in favor of the familiar and conventional.

Not a Moral Panic

Skeptics are right about one thing: culture has always adapted to new technologies. Photography did not destroy painting, and cinema did not displace theater. Digital tools have opened new avenues for self-expression. However, earlier technologies did not force culture to be endlessly translated from one medium to another on a global scale. Nor did they summarize, generate, or rank cultural products (articles, songs, memes, academic papers, or social media posts) millions of times a day according to the same notion of what is "typical."

The experiment showed that over repeated cycles, diversity diminishes not through malice but because only certain kinds of meaning survive the conversion from text to image and back. This does not mean that cultural stagnation is inevitable. Human creativity is resilient, and institutions, subcultures, and artists have always found ways to resist homogenization. But the risk of stagnation is real if generative systems are left as they are.

The study also punctures the myth of AI creativity: producing endless variations is not the same as innovating. A system may generate millions of images yet explore only a small corner of cultural space.

For something new to emerge, AI systems would need to be designed to seek out deviations from cultural norms.

The Transition Error

Every time an image is captioned, some detail is lost. The same happens when an image is created from text, whether by a human or a machine. In this sense, the observed convergence is not a problem unique to AI but a reflection of a deeper property of moving from one medium to another. When meaning repeatedly passes between two formats, only its most stable elements survive.
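To make the "only the most stable elements survive" intuition concrete, here is a toy model in Python. It is a hypothetical illustration, not the researchers' setup: prompts are points in an invented two-dimensional "meaning space," the three "familiar themes" and their coordinates are made up, and each text-to-image or image-to-text step is modeled as keeping only part of what is specific to the input while pulling it toward the nearest familiar theme (the `pull` parameter is likewise an assumption).

```python
import math

# Hypothetical "familiar" themes the toy model is biased toward.
# Coordinates in this 2-D "meaning space" are purely illustrative.
ATTRACTORS = {
    "urban landscape": (0.0, 0.0),
    "majestic building": (10.0, 0.0),
    "pastoral scene": (0.0, 10.0),
}


def nearest_theme(point):
    """Return the name of the familiar theme closest to `point`."""
    return min(ATTRACTORS, key=lambda name: math.dist(point, ATTRACTORS[name]))


def lossy_step(point, pull):
    """One lossy translation (text->image or image->text).

    The point's offset from its nearest theme is scaled by (1 - pull):
    part of what makes it specific is lost, and the rest drifts toward
    the familiar theme.
    """
    tx, ty = ATTRACTORS[nearest_theme(point)]
    x, y = point
    return (x + pull * (tx - x), y + pull * (ty - y))


def round_trips(point, cycles, pull=0.2):
    """Run `cycles` description->image round trips (two lossy steps each)."""
    for _ in range(cycles):
        point = lossy_step(point, pull)  # caption the image
        point = lossy_step(point, pull)  # render the caption as a new image
    return point


# Five distinct hypothetical starting prompts.
prompts = [(1.0, 2.0), (3.0, 4.0), (8.0, 1.0), (2.0, 7.5), (4.0, 4.5)]
for p in prompts:
    end = round_trips(p, cycles=30)
    print(p, "->", nearest_theme(end), tuple(round(c, 3) for c in end))
```

In this sketch, the five starting points end up practically indistinguishable from one of only three themes after 30 round trips. Because each step moves a point in a straight line toward the theme whose region it already occupies, a point never switches theme, so the outcome depends only on which familiar theme the prompt was closest to at the start. The details of the original prompt are what get lost along the way.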

The authors show that generative systems process meaning with a built-in tendency to generalize, and they highlight what is retained when text is repeatedly transformed into images and back.

The conclusion is not encouraging: even with human involvement, whether in writing prompts or in selecting and refining results, these systems still strip out some details and amplify others, gravitating toward the "average."

If generative AI is to enrich culture rather than diminish it, systems must be designed to keep content from sliding toward statistically averaged results. Deviation should be encouraged, and less common forms of self-expression should be supported.

The study clearly demonstrates that without such measures, generative AI will continue to produce mediocre and uninteresting content. Cultural stagnation is no longer just a hypothesis; it is a reality.
