AI can change your political views

Recent studies show that a brief conversation with a chatbot built to persuade can be nearly four times as effective as traditional television advertising.

Authors of the article: Steven Lee Myers and Teddy Rosenbluth

Chatbots, known for their ability to assist in vacation planning and fact-checking, can also influence users' political preferences.

According to two studies published in the journals Nature and Science, even brief interactions with an AI chatbot can change people's views on candidates or political issues. One study found that conversations with such a bot were nearly four times as persuasive as the television advertising used in the most recent U.S. presidential election.

These results highlight the growing importance of AI in political campaigns, especially ahead of next year's U.S. midterm elections. "This will become an important part of new technologies in political processes," noted David G. Rand, a professor of computer science and marketing at Cornell University, who took part in the research.

In the experiments, the researchers used commercially available chatbots such as OpenAI's ChatGPT, Meta's Llama, and Google's Gemini. The chatbots were tasked with persuading participants to support a specific candidate or position on a political issue.

As chatbots have grown more popular, concerns have emerged that they could be used to manipulate voters. While most chatbots strive to remain politically neutral, some, like Grok, the bot built into X, may reflect the views of their creators.

The authors of the Science paper warn that advances in AI could provide "influential players with a significant advantage in persuasion."

The study found that the chatbots often distorted the truth and presented unverified claims. The analysis showed that bots advocating for right-wing politicians were less accurate than those advocating for left-wing ones.

In the Science paper, the researchers described conversations with nearly 77,000 voters in the UK on more than 700 political issues, including taxes, gender issues, and relations with Vladimir Putin.

In the Nature study, which involved respondents from the U.S., Canada, and Poland, chatbots were tasked with persuading people to support one of two candidates in elections held in 2024 and 2025. In Canada and Poland, about one in ten respondents said that their conversations with the AI had changed their opinion of a candidate; in the U.S., the figure was one in 25.

In one conversation with a Trump supporter, a chatbot cited Kamala Harris's achievements, such as creating the Bureau of Children's Justice in California and promoting the Consumer Protection Act. It also pointed to tax fraud by the Trump Organization, for which the company was fined $1.6 million.

The Trump supporter then began to waver, writing: "If I had doubts about Harris's reliability, now I really believe her and might vote for her."

The chatbot arguing for Trump also proved quite persuasive. It described Trump's commitment to tax cuts and economic deregulation, which impressed a Harris supporter. "His actions, though with different results, show a level of reliability," the bot added.

The Harris supporter admitted: "I should have been less biased against Trump."

Political strategists are eager to use chatbots to win over skeptical voters, especially amid deep partisan divides.

Ethan Porter, a misinformation researcher at George Washington University, cautioned that outside controlled experiments it would be difficult to get people to interact with such chatbots.

The researchers hypothesized that the chatbots' persuasiveness stems from the quality of their arguments, even when some of the facts are inaccurate. Testing this hypothesis, they found that when the arguments were stripped of facts, their persuasiveness fell by half.

These findings contradict the common belief that people's political views are resistant to change in the face of new information. "There is a belief that people ignore facts that are unacceptable to them," noted Rand. "Our work shows that this is not entirely true."

Original: The New York Times

Read also:

In the UK, an investigation is underway into the behavior of the chat bot Grok, created by Elon Musk, that "undresses" real people.

The UK regulator Ofcom has initiated an investigation into the platform X, owned by Elon Musk, due...

Voting - a right or a duty? The controversial bill of the deputy

Deputy Marlen Mamataliyev has proposed making participation of citizens of Kyrgyzstan in elections...

Nelly Nosalik: Advertising on Social Media - The Most Promising Direction

In Kyrgyzstan, there is a growing interest in women's entrepreneurship in the field of...

The Grok Chatbot Will Be Added to the Pentagon Network

“Soon we will have leading global AI models in every unclassified and classified network of our...

The President of Chile is José Antonio Kast, a supporter of dictator Pinochet

José Antonio Kast, representing the far-right political force, has been elected as the President...

Aeon: How the Conquest of Foreign Territories Came to Be Considered Unacceptable

Author: Cary Goettlich In the modern world, there are fewer and fewer things that evoke consensus...

The J-1 Cultural Exchange Program in the USA has turned into a scheme for profiting from and exploiting foreign interns, - The New York Times

Another scheme involved employing close relatives of the CEO, which brought his family over 1...

Problems of Diagnosing Hip Joint Dysplasia in Kyrgyzstan. Archival Interview with Kasymbek Tazabekov

In Kyrgyzstan, orthopedic diseases remain one of the key medical problems. According to the...

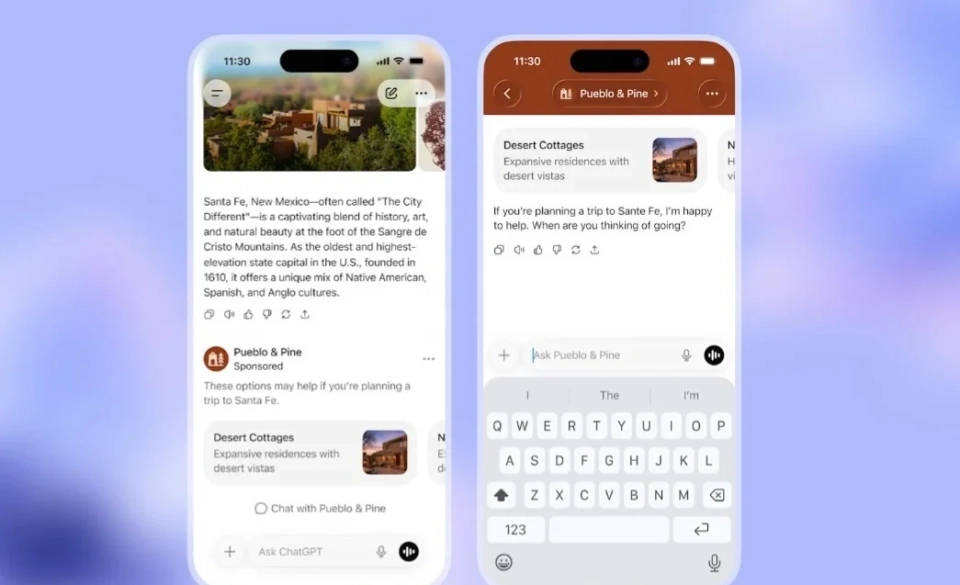

OpenAI Launches Ads in the Free Version of ChatGPT

The company OpenAI has announced the start of testing advertisements in its product ChatGPT. Ads...

Bloomberg: OpenAI will start testing advertising in ChatGPT

According to information provided by Bloomberg, OpenAI will begin testing advertising in ChatGPT,...

Non-Combat and Unrecognized: Suicides in the Ukrainian Army That Are Silent

This is a translation of an article from the Ukrainian service of the BBC. The original is...

Nostradamus' Prophecies for 2026: King Donald Trump, the Decline of the West, Widespread War, and AI

As 2026 approaches, interest in Nostradamus' "Prophecies" — the famous collection...

Which social network is the most popular in Kyrgyzstan and what do Kyrgyz people search for on YouTube?

In the report by the analytical company Kepios titled Digital 2026: Kyrgyzstan (with data as of...

The Untouchables. How the Son of a Nazi and a Pinochet Fan Came to Power in Chile

The elections in Chile, which concluded in December with the triumph of the far-right politician...

How Crypto Miners Stole $700 Million from People, Often Using Old Proven Methods

The theft of cryptocurrency evokes a particular, agonizing feeling. All transactions are recorded...

Consciousness Can Move Through Time — This Means That "Intuitive Feelings" Are Memories from the Future

That night, I was a little girl. This strange phenomenon that I experienced became one of the...

Why the U.S. Should Include the Turkic States Organization in Its Policy Toward Central Asia - The National Interest

The visit of Kubanychbek Omuraliev, the Secretary General of the Organization of Turkic States, to...

The head of the White House staff stated that her sharp comments about Musk and Vance were taken out of context.

On December 16, Vanity Fair published the first part of an extensive article based on numerous...

Tokayev: Kazakhstan has entered a new stage of modernization

Curl error: Operation timed out after 120001 milliseconds with 0 bytes received...

Bloomberg: Elon Musk Demands Compensation from OpenAI of Up to $134 Billion

According to information provided by Bloomberg, Musk and his legal team claim that OpenAI deceived...

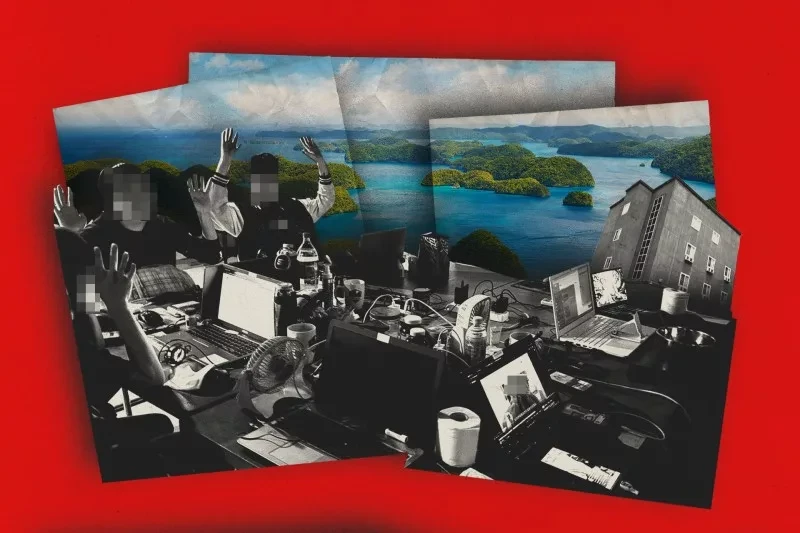

Foreign Workers, Local Sponsors: How Cyber Fraud Schemes with Hotels in Palau Are Organized

New data reveals the mechanisms of operation of two alleged fraudulent centers in Palau, a small...

How "Eurasia" is Changing the Daily Lives of Millions in Kyrgyzstan

Curl error: Operation timed out after 120001 milliseconds with 0 bytes received...

Alibaba's Head: China's Chances of Surpassing the U.S. in AI Are Less Than 20%

At a recent conference in Beijing, artificial intelligence experts from China presented their...

Mobicom officially launches mobiAI, an advanced AI chatbot

The new mobiAI assistant is designed to simplify user interaction with technological innovations,...

Did Trump Exchange Taiwan and Ukraine for Venezuela?

Analyzing recent events, Jaykhun Ashirov reflects on why the theory of a conspiracy between the...

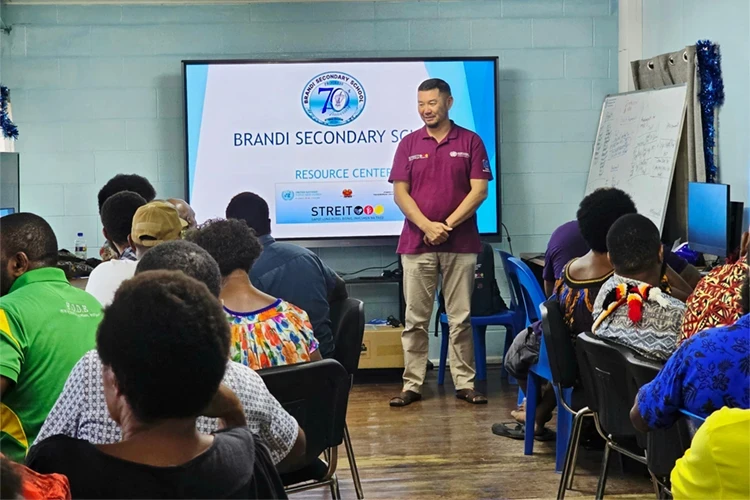

Like Another Planet. A Kyrgyz Person on Life in Papua New Guinea and Working at the UN

Kанагат Алышбаев, a native of the village of Sary-Kamysh in the Issyk-Kul region, holds a degree...

Ways to Contact Pinko Support: Online Chat, Email, and Phone

Online Chat: Instant Answers at Pinco Casino Azerbaijan The most effective and popular way to...

What the Labor Market in Kyrgyzstan Was Like in 2025: Salaries, Job Vacancies, Regional Rankings

The labor market is a reflection of social and economic processes, and analysts from the platform...

The Ministry of Health instructed to create a database of unscrupulous participants in the pharmaceutical market and to strengthen control over drug procurement.

The Ministry of Health of Kyrgyzstan has announced its intention to create a registry of...

Sadyr Japarov spoke at the IV People's Kurultai (text of the speech)

Today, December 25, the President of Kyrgyzstan, Sadyr Japarov, addressed the people, the deputies...

Is Kazakhstan Awaiting a Water Collapse Following the Iranian Scenario?

The inability of the new Ministry of Water Resources to address the water shortage problem is...

What is happening with green cards? Should we expect the lottery? And what about those who have already won it?

The U.S. Green Card Lottery (Diversity Visa Lottery) has long served as one of the most accessible...

Tokaev gave a major interview to the Turkistan newspaper. It covers reforms, AI, nuclear power plants, Nazarbayev, and much more.

President Kassym-Jomart Tokaev shared his views on current challenges and achievements in his...

Life in the Regions: A Bullet in the Seat of a Honda — How One Detail Helped Investigator Kurmanbek Kanybek Uulu from Osh Uncover an Organized Crime Group

Kurmanbek Kanybek uulu — an investigator of the control and methodological department of the...

US sanctions kill half a million people a year worldwide

In a study conducted by scientists from the University of Denver and the Center for Economic...

"We will ensure his election as the 48th president of the USA." The widow and supporters of Charlie Kirk backed J.D. Vance.

At the event, Erika Kirk, speaking before more than 30,000 supporters, stated: “We will ensure...

Cinema as a Tool for Human Rights Protection: An Interview with Swiss Documentarian Stefan Ziegler

At the end of 2025, a ceremony for the "Ak Ilbirs" award took place at the National Opera...

Zelensky spoke about the 20 points of the peace plan. What's new in it?

President of Ukraine Volodymyr Zelensky presented a draft agreement for ending the war, which was...

"War Will Change Beyond Recognition." Colonel of the General Staff of Russia — on the Lessons of Military Actions in Ukraine, Changes in the Army, and the Weapons of the Future

The conflict in Ukraine has not only become a catalyst for changes in the military sphere but has...

A Year of Turbulence and Pragmatism. What 2025 Will Be Remembered For and What to Expect in 2026

The outgoing year 2025 was marked by significant global turbulence and a new perspective on...

Canada fears it may become Trump's next target after Venezuela and Greenland

Canada expresses concerns that it may become the next target for Donald Trump's presidential...

In Bishkek, the results of the media campaign for the 100th anniversary of the women's movement in Kyrgyzstan were summarized

On December 25, a presentation of the results of a nationwide media campaign dedicated to the 100th...

China is concerned that AI threatens the power of the Communist Party and is trying to rein it in

Chatbots pose a particular threat, as their ability to generate their own responses may encourage...

Why Tourists Need Almaty, Not New York, Moscow, or Paris

Kassym-Jomart Tokayev, in an interview, emphasized the need to transform Almaty into a unique...

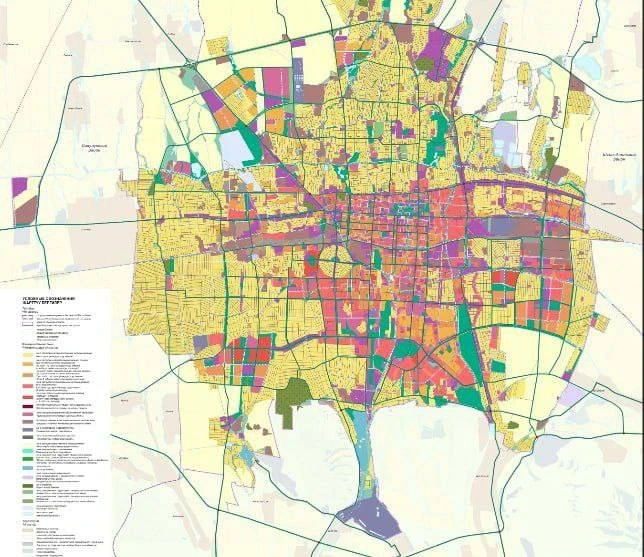

Bishkek Master Plan: Whose Homes and Lands May Be at Risk of Changes

The Bishkek City Hall presented responses to questions and suggestions from residents that were...

For the first time, a scientist from the Kyrgyz Republic has been awarded the Russian Government Prize in the field of science

Since 1994, the government of Russia has annually awarded prizes in the fields of science and...

Conversation while driving slows down eye reactions, increasing the risk of accidents

Recent research conducted by scientists from Fujita Health University has revealed that...

The deputy proposes to punish persistent election non-voters and reward those who vote. Project

Deputy Marlen Mamataliyev has once again proposed a draft law for public discussion, which implies...