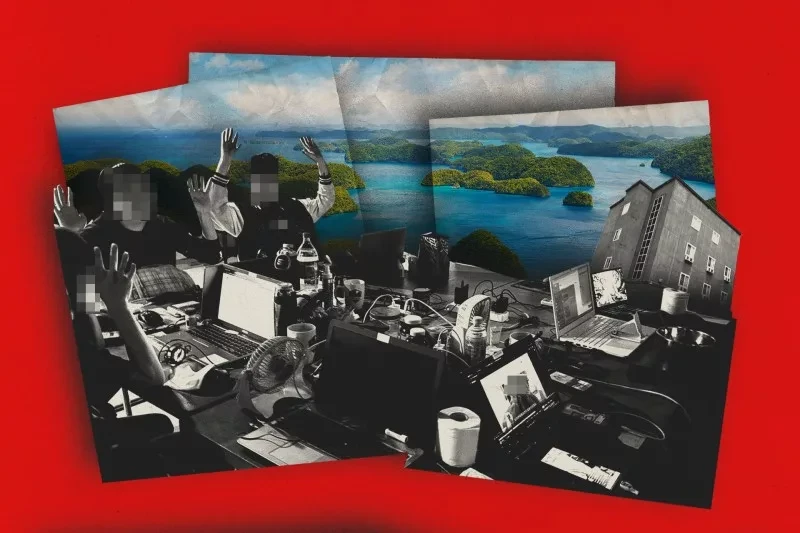

According to a new study conducted by UNICEF in collaboration with the international organization ECPAT and Interpol, more than 1.2 million children in 11 countries reported that their images had been manipulated into sexually explicit deepfakes in the past year. In some regions, one in 25 children was affected, the equivalent of one student in an average classroom.

Children themselves are aware of the risk. In the countries where the research was conducted, up to two-thirds of the children surveyed said they were worried that AI could be used to create fake sexual images of them. The level of concern varies significantly from country to country, which highlights the need for greater awareness and for stronger preventive and protective measures.

To be clear, sexualized images of children created using AI are child sexual abuse material (CSAM). Deepfake abuse is a serious problem that causes real harm.

When a child's image or identity is used, that child is directly victimized. Even when no identifiable victim exists, AI-generated material normalizes the sexual exploitation of children, fuels demand for abusive content, and makes it harder for law enforcement to identify and protect children who need help.

UNICEF welcomes the efforts of AI developers who are working to build in safety and protection measures that prevent misuse of their technologies. However, many AI models are still being developed without adequate safeguards. The risks grow when generative AI tools are integrated into social networks, where manipulated images can spread rapidly.

The organization calls for the following actions to counter the growing threat of AI-generated child sexual abuse material:

- All governments should expand the definition of child sexual abuse material (CSAM) to include AI-generated content and criminalize its creation, possession, and distribution.

- AI developers should adopt safety-by-design approaches and put reliable safeguards in place to prevent misuse of their models.

- Digital companies should develop mechanisms to prevent the spread of AI-generated sexual material and improve content moderation so that such material is removed immediately, not days after a victim reports it.

The harm caused by deepfake abuse is real and demands immediate action. Children cannot wait for laws to come into effect.